AI Developments: Report #3

- Johnny Shollaj

- Technology, Data

- 28 Aug, 2023

This week's main highlights are open-source initiatives such as Meta's Code Llama, along with numerous frameworks that support LLM development. New surveys also track research on LLM-based agents and on instruction tuning, a form of fine-tuning.

How con artists use AI, apps, social engineering to target parents, grandparents for theft

Main Highlight: The article discusses the growing problem of online scams targeting older Americans, revealing that seniors are the group losing the most money to these scams. Utilizing AI, social engineering, and readily available apps, scammers are executing increasingly sophisticated operations, including the "grandparent scam," which cost victims thousands of dollars. A report from the FBI notes that Americans lost over $10 billion to online scams and digital fraud last year.

Terms to Understand: Grandparent Scam, Social Engineering, Ethical Hacker

Sharyn Alfonsi | CBS News

Publication Date: August 27, 2023

Midjourney Takes on Photoshop with Its Own AI Generative Fill-Like Feature

Main Highlight: Midjourney, a leading AI art generator, is launching a new feature called Vary (Region), designed to compete with Adobe Photoshop’s Generative Fill tool. The Vary (Region) feature allows users to regenerate specific parts of an upscaled image, giving them the ability to add, remove, or alter elements within the image.

Terms to Understand: Generative Fill, Vary (Region), Text-to-Image Generation

Siddhartha Samaddar | Beebom

Publication Date: August 27, 2023

Can AI-generated art be copyrighted? A US judge says not, but it’s just a matter of time

Main Highlight: A U.S. federal judge recently denied copyright protection to an artwork generated solely by AI, reasoning that current American copyright law protects only works of human creation. However, the judge acknowledged that copyright law is flexible enough to adapt to technological innovation and suggested that it is only a matter of time before works created by AI could qualify for copyright.

Terms to Understand: Human Involvement Criterion, Copyright Law, AI-Generated Art

John Naughton | The Guardian

Publication Date: August 26, 2023

How To Use AI To Create an Award-Winning Podcast

Main Highlight: The article discusses how the integration of AI and predictive analytics can give podcast creators a competitive edge by tailoring content to their audience, automating content curation, enhancing listener interaction, and personalizing advertising. However, it emphasizes the need to maintain the human element for authenticity and personal touch in podcasts.

Terms to Understand: Predictive Analytics, Artificial Intelligence (AI), Content Strategy

Adam Torkildson | Entrepreneur

Publication Date: August 25, 2023

Nvidia Dominates the AI Chip Market

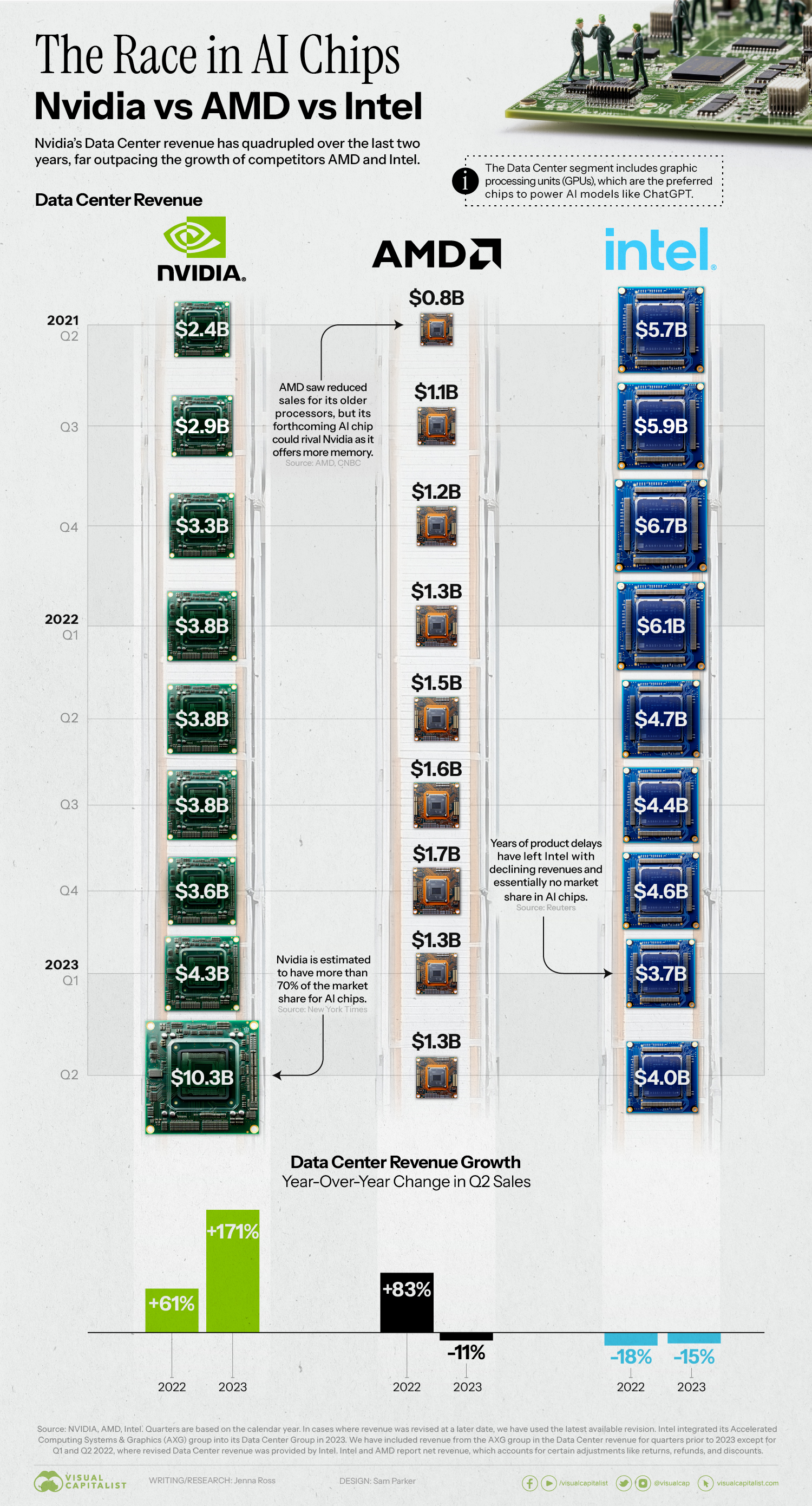

Main Highlight: Nvidia has emerged as the dominant player in AI chip sales, with Data Center revenue of $10.3 billion in the quarter ending July 2023 (its fiscal Q2 2024) and more than 70% of the AI chip market. AMD trails behind but is refocusing on AI with new chips that offer more memory. Intel lags significantly, facing declining revenue and holding almost no share of the AI chip market.

Terms to Understand: Data Center Revenue, Market Share, AI Chips

Jenna Ross & Sam Parker | Visual Capitalist

Publication Date: August 25, 2023

Research Blogs

Teaching language models to reason algorithmically

Main Highlight: The article discusses a new approach for teaching Large Language Models (LLMs) algorithmic reasoning capabilities through in-context learning and algorithmic prompting. This enables LLMs to solve more complex arithmetic problems and work effectively on out-of-distribution examples, potentially unlocking greater reasoning capabilities in such models.

Terms to Understand: In-Context Learning, Algorithmic Prompting, Out-of-Distribution Generalization

Hattie Zhou and Hanie Sedghi | Google Research

Updated Publication Date: August 8, 2023
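To make "algorithmic prompting" concrete, here is a minimal sketch of the kind of step-by-step trace such a prompt spells out, assuming multi-digit addition as the task. The helper `addition_trace` and its wording are hypothetical and are not the prompt format used by the authors. The sketch only illustrates the idea of writing every digit and carry explicitly so that a model shown such traces in context can imitate the procedure.

```python
def addition_trace(a: int, b: int) -> str:
    """Spell out column-by-column addition of two non-negative integers.

    Hypothetical illustration of an algorithmic-prompt demonstration:
    every intermediate step, including each carry, is written down.
    """
    xs, ys = str(a)[::-1], str(b)[::-1]  # least-significant digit first
    carry = 0
    digits = []
    lines = [f"Problem: {a} + {b}"]
    for i in range(max(len(xs), len(ys))):
        x = int(xs[i]) if i < len(xs) else 0
        y = int(ys[i]) if i < len(ys) else 0
        total = x + y + carry
        digit, new_carry = total % 10, total // 10
        lines.append(
            f"Step {i + 1}: {x} + {y} + carry {carry} = {total}, "
            f"write {digit}, carry {new_carry}"
        )
        digits.append(str(digit))
        carry = new_carry
    if carry:
        # A leftover carry becomes the most significant digit.
        lines.append(f"Final carry: write {carry}")
        digits.append(str(carry))
    lines.append(f"Answer: {''.join(reversed(digits))}")
    return "\n".join(lines)


print(addition_trace(128, 367))
```

A trace like this would serve as one in-context demonstration. The point of making each step unambiguous is that the model can follow the same procedure on out-of-distribution inputs, such as numbers longer than those in the examples.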

Responsible AI at Google Research: Perception Fairness

Main Highlight: Google's Perception Fairness team is actively working on integrating fairness and inclusion into multimodal machine learning systems, including computer vision and generative AI technologies. The team's research spans from addressing system biases to optimizing performance metrics that include fairness, with practical applications influencing Google's products like Search and Google Photos.

Terms to Understand: Multimodal Machine Learning, Perception Fairness, AI Principles

Susanna Ricco and Utsav Prabhu | Google Research

Publication Date: August 25, 2023
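One generic way to make fairness part of a performance metric is disaggregated evaluation. You compute the metric separately for each subgroup and track the gap between the best- and worst-served groups. The sketch below is a general illustration, not the Perception Fairness team's methodology. The function names, group labels and toy data are hypothetical.

```python
from collections import defaultdict


def per_group_accuracy(labels, preds, groups):
    """Return accuracy for each subgroup that appears in `groups`."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for y, p, g in zip(labels, preds, groups):
        total[g] += 1
        correct[g] += int(y == p)
    return {g: correct[g] / total[g] for g in total}


def accuracy_gap(labels, preds, groups):
    """Largest accuracy difference between any two subgroups."""
    acc = per_group_accuracy(labels, preds, groups)
    return max(acc.values()) - min(acc.values())


# Toy, made-up data: two hypothetical subgroups "a" and "b".
labels = [1, 0, 1, 1, 0, 1]
preds = [1, 0, 1, 0, 1, 1]
groups = ["a", "a", "a", "b", "b", "b"]
print(per_group_accuracy(labels, preds, groups))
print(accuracy_gap(labels, preds, groups))
```

Reporting the gap next to overall accuracy shows disparities that a single aggregate number would hide. In this toy case, overall accuracy is 4/6, but group "b" is served much worse than group "a".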

Research Papers

Code Llama: Open Foundation Models for Code

Main Highlight: The paper introduces Code Llama, a family of large language models for code generation and infilling. The models come in three flavors: foundation models, Python specializations (Code Llama - Python) and instruction-following models (Code Llama - Instruct). Each is available with 7B, 13B and 34B parameters. The models are trained on sequences of 16k tokens and handle input contexts of up to 100k tokens. They achieve state-of-the-art performance among open models on code benchmarks such as HumanEval and MBPP. The 7B and 13B base and Instruct variants also support infilling based on the surrounding code.

Terms to Understand: Code Llama (family of large language models for code), Infilling (filling in missing parts of code), HumanEval & MBPP (code benchmarks).

Meta

Publication Date: August 24, 2023
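Infilling means the model sees the code before and after a gap and generates the missing middle. Below is a minimal sketch of assembling such a prompt. It assumes the prefix-suffix-middle layout with `<PRE>`, `<SUF>` and `<MID>` sentinels that appears in public Code Llama examples, so check the exact format against the tokenizer of the checkpoint you use. The helper names are hypothetical, and the model call is omitted: the completion is hard-coded.

```python
def build_infilling_prompt(prefix: str, suffix: str) -> str:
    """Assemble a prefix-suffix-middle (PSM) infilling prompt.

    ASSUMPTION: sentinel strings follow public Code Llama examples;
    verify them against the tokenizer you actually use.
    """
    return f"<PRE> {prefix} <SUF>{suffix} <MID>"


def splice(prefix: str, middle: str, suffix: str) -> str:
    """Put the generated middle back between the prefix and the suffix."""
    return prefix + middle + suffix


prefix = "def add(a, b):\n    "
suffix = "\n\nprint(add(2, 3))\n"
prompt = build_infilling_prompt(prefix, suffix)
# A model would generate the middle; it is hard-coded here for illustration.
completion = "return a + b"
program = splice(prefix, completion, suffix)
print(program)
```

The model's output is only the middle span. Keeping prefix and suffix separate makes it simple to splice the generated text back into the file.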

ExpeL: LLM Agents Are Experiential Learners

Main Highlight: The paper introduces the Experiential Learning (ExpeL) agent, a novel LLM-based agent for decision-making tasks. ExpeL needs no fine-tuning or access to model parameters. Instead, it gathers experience from a set of training tasks and extracts insights in natural language. At inference time, it recalls those insights and past experiences to make informed decisions, so its performance improves as it accumulates experience. Empirically, the agent outperforms strong baselines across several domains and exhibits forward transferability of knowledge.

Terms to Understand: Experiential Learning (ExpeL), Large Language Models (LLMs), Forward Transferability

Zhao, Huang, Xu, Lin, Liu, Huang

Publication Date: August 20, 2023
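ExpeL combines a store of past attempts with knowledge distilled from them, both reused through the prompt without touching model weights. A minimal sketch of that bookkeeping follows. `ExperiencePool`, its word-overlap similarity and the prompt layout are hypothetical stand-ins, not the paper's implementation. The step in which an LLM extracts insights is left out, so here the insights are supplied by hand.

```python
from dataclasses import dataclass, field


@dataclass
class Experience:
    task: str         # task description
    trajectory: str   # actions/observations recorded during the attempt
    success: bool     # whether the attempt solved the task


@dataclass
class ExperiencePool:
    """Hypothetical experience store; not the ExpeL authors' code."""
    insights: list[str] = field(default_factory=list)
    experiences: list[Experience] = field(default_factory=list)

    def add(self, exp: Experience) -> None:
        self.experiences.append(exp)

    @staticmethod
    def similarity(a: str, b: str) -> float:
        # Jaccard overlap of lower-cased words, a simple stand-in for
        # whatever similarity measure a real system would use.
        wa, wb = set(a.lower().split()), set(b.lower().split())
        union = wa | wb
        return len(wa & wb) / len(union) if union else 0.0

    def retrieve(self, task: str, k: int = 2) -> list[str]:
        """Return up to k successful trajectories most similar to `task`."""
        wins = [e for e in self.experiences if e.success]
        wins.sort(key=lambda e: self.similarity(task, e.task), reverse=True)
        return [e.trajectory for e in wins[:k]]

    def build_prompt(self, task: str, k: int = 2) -> str:
        """Combine stored insights and retrieved examples into one prompt."""
        lines = ["Insights:"] + [f"- {i}" for i in self.insights]
        lines += ["Examples:"] + self.retrieve(task, k)
        lines.append(f"Task: {task}")
        return "\n".join(lines)


pool = ExperiencePool(insights=["Search likely containers before giving up."])
pool.add(Experience("find a mug in the kitchen", "go to kitchen; open cabinet; take mug", True))
pool.add(Experience("find a mug in the office", "go to office; look at desk", False))
pool.add(Experience("water the plant", "take can; fill can; pour water", True))
print(pool.build_prompt("find a cup in the kitchen", k=1))
```

Only successful attempts are recalled as examples. Failed attempts are kept in the pool because comparing them with successes is where insights would come from.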

Instruction Tuning for Large Language Models: A Survey

Main Highlight: The paper provides a comprehensive survey on the emerging field of Instruction Tuning (IT) for large language models (LLMs). It delves into the methodologies, dataset construction, model training, applications across various modalities, and critiques of IT. The paper also identifies the advantages and shortcomings of IT, including its ability to make LLMs more controllable and challenges related to the quality of instructions and task understanding.

Terms to Understand: Instruction Tuning (IT), Large Language Models (LLMs), Dataset Construction.

Zhang, Dong et al.

Publication Date: August 21, 2023
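Dataset construction for instruction tuning usually starts from records that pair an instruction (and optional input) with a desired output. These records are then rendered into training text. Below is a minimal sketch, assuming section headers loosely modeled on the widely copied Alpaca-style template. The function name and the example record are hypothetical, and other datasets use different layouts.

```python
def format_example(instruction: str, output: str, input_text: str = "") -> tuple[str, str]:
    """Render one (instruction, input, output) record as training text.

    ASSUMPTION: headers loosely follow the Alpaca-style template.
    Returns (prompt, full_text) so the response part can be told apart.
    """
    prompt = f"### Instruction:\n{instruction}\n\n"
    if input_text:
        prompt += f"### Input:\n{input_text}\n\n"
    prompt += "### Response:\n"
    return prompt, prompt + output


prompt, full_text = format_example(
    instruction="Translate the sentence to French.",
    input_text="Good morning.",
    output="Bonjour.",
)
print(full_text)
# Many setups compute the training loss only on full_text[len(prompt):].
```

Returning the prompt separately makes it easy to mask the instruction tokens during training, so the model is optimized to produce the response rather than to repeat the instruction.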

Use of LLMs for Illicit Purposes: Threats, Prevention Measures, and Vulnerabilities

Main Highlight: The paper examines the emerging security and safety risks posed by the growing use of large language models (LLMs) across sectors. It groups these concerns into three categories: threats such as fraud and malware generation, prevention measures against those threats, and vulnerabilities that arise from imperfect prevention. The authors aim to raise awareness of these issues among both developers and users. They also advocate peer review to identify and prioritize the most relevant concerns.

Terms to Understand: Large Language Models (LLMs), Security Threats, Prevention Measures.

Mozes, He, Kleinberg, Griffin

Publication Date: August 24, 2023

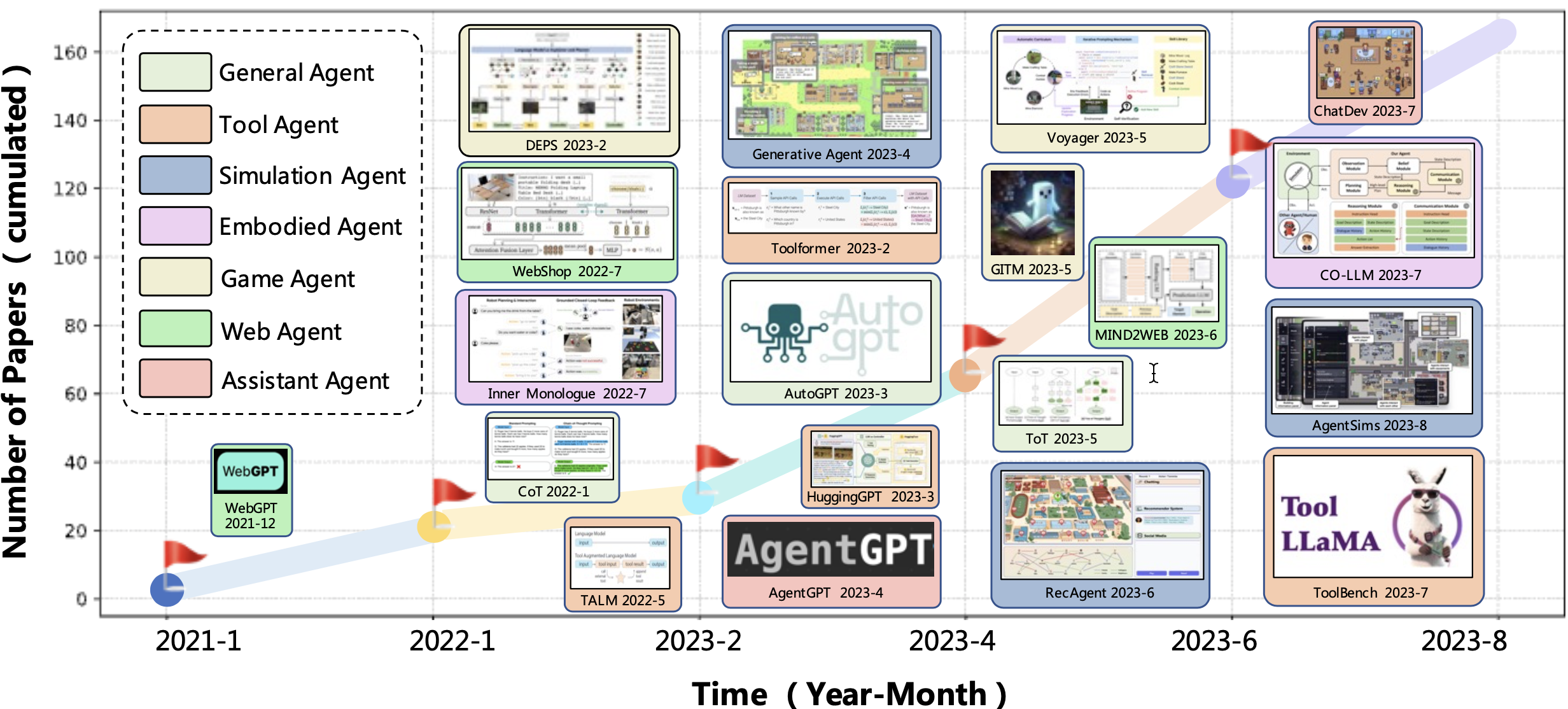

A Survey on Large Language Model based Autonomous Agents

Main Highlight: The paper presents a comprehensive survey of the emerging field of autonomous agents based on large language models (LLMs). It organizes the research into three main aspects: agent construction, applications in domains such as social science and engineering, and evaluation methods. The paper aims to establish a unified framework for LLM-based agents and to identify challenges and future directions in this rapidly evolving field.

Terms to Understand: Large Language Models (LLMs), Autonomous Agents, Unified Framework.

Wang, Ma et al.

Publication Date: August 22, 2023
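To show what agent construction involves, here is a toy sketch that wires together components commonly discussed for LLM-based agents: a profile (role description), memory, planning and action. Everything in it is hypothetical and illustrative. `Agent` and the tool names are not from the survey, and `fake_llm` is a hard-coded stand-in for a real model so the example runs offline.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    """Toy agent: profile + memory + planning + action (hypothetical)."""
    profile: str                       # role description given to the model
    llm: Callable[[str], str]          # any text-in/text-out model
    tools: dict                        # action name -> Python function
    memory: list = field(default_factory=list)

    def plan(self, goal: str) -> list:
        # Ask the model for a plan with one "tool: argument" step per line.
        prompt = f"{self.profile}\nMemory: {self.memory}\nGoal: {goal}"
        return [s for s in self.llm(prompt).splitlines() if s.strip()]

    def act(self, step: str) -> str:
        # Execute one step with the named tool and remember the outcome.
        name, _, arg = step.partition(":")
        result = self.tools[name.strip()](arg.strip())
        self.memory.append(f"{step} -> {result}")
        return result

    def run(self, goal: str) -> list:
        return [self.act(step) for step in self.plan(goal)]


def fake_llm(prompt: str) -> str:
    """Stand-in for a real LLM: always returns the same two-step plan."""
    return "calc: 2 + 3\necho: done"


tools = {
    "calc": lambda expr: str(sum(int(t) for t in expr.split("+"))),
    "echo": lambda text: text,
}
agent = Agent(profile="You are a helpful assistant.", llm=fake_llm, tools=tools)
print(agent.run("add two numbers"))
print(agent.memory)
```

Because the model is injected as a plain callable, the same loop could be pointed at a real LLM client. The memory passed into each planning prompt is what lets later plans depend on earlier outcomes.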

Prompt2Model: Generating Deployable Models from Natural Language Instructions

Main Highlight: The paper introduces Prompt2Model, a framework that turns a natural language prompt into a task-specific NLP model. It bridges the gap between the easy prototyping offered by large language models (LLMs) and the need for efficient, deployable models. On three tasks, given the same few-shot prompt as input, Prompt2Model trains specialized models that outperform gpt-3.5-turbo by an average of 20% while being up to 700 times smaller, which makes them suitable for real-world deployment.

Terms to Understand: Prompting, Task-specific models, Model distillation

Viswanathan, Zhao, Bertsch, Wu, Neubig

Publication Date: August 23, 2023
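Because the input is a few-shot prompt, a pipeline like this first has to separate the task instruction from the demonstrations. Below is a minimal rule-based sketch of that step. It assumes demonstrations are written as `Input:` / `Output:` lines, and `parse_prompt` is a hypothetical helper, not Prompt2Model's own parser.

```python
import re


def parse_prompt(prompt: str) -> tuple[str, list[tuple[str, str]]]:
    """Split a few-shot prompt into (instruction, [(input, output), ...]).

    ASSUMPTION: demonstrations use 'Input: ...' / 'Output: ...' lines;
    this regex is only a stand-in for a more robust parser.
    """
    pattern = re.compile(r"^Input:\s*(.*?)\s*\nOutput:\s*(.*?)\s*$", re.MULTILINE)
    demos = pattern.findall(prompt)
    first = pattern.search(prompt)
    # Everything before the first demonstration is treated as the instruction.
    instruction = prompt[: first.start()].strip() if first else prompt.strip()
    return instruction, demos


example = """Answer the question with a single word.

Input: What color is the sky?
Output: Blue
Input: What is frozen water called?
Output: Ice
"""
instruction, demos = parse_prompt(example)
print(instruction)
print(demos)
```

In a full pipeline, the parsed instruction and demonstrations would feed later stages, such as retrieving or generating training data and fine-tuning a small model.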

Development

DSPy: The framework for programming with foundation models

Main Highlight: DSPy is a Pythonic framework for building programs with language models (LMs) and retrieval models (RMs). It provides modular, composable techniques for prompting, fine-tuning and reasoning. It also introduces an automatic compiler that teaches LMs how to carry out the steps of a program without manual labels for the intermediate steps. This lets the approach scale and adapt to a variety of tasks.

Terms to Understand: Language Models (LMs), Retrieval Models (RMs), Prompting, Fine-Tuning, Compiler

Author(s): Omar Khattab, Chris Potts and Matei Zaharia

GPT Pilot

Main Highlight: GPT Pilot is a research project exploring how GPT-4 can be used to generate fully working, production-ready apps. Its premise is that AI can write most of an app's code (perhaps 95%), while a developer is still needed for the remaining 5%, such as adjustments and debugging. The tool builds the app step by step, which makes debugging easier and the code easier to understand. It is designed to scale to large, complex applications.

Terms to Understand: GPT Pilot, AGI (Artificial General Intelligence), TDD (Test-Driven Development)

Author: Pythagora-io

CodeLlama

Main Highlight: Code Llama is a state-of-the-art family of large language models for code with various specializations, including Python and instruction-following. It offers models with 7B, 13B, and 34B parameters and supports large input contexts and zero-shot instruction following. The technology is aimed at individuals, creators, researchers, and businesses to foster innovation in code-related tasks.

Terms to Understand: Infilling, Zero-Shot Instruction Following, Tokenizers

Author: Meta
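Zero-shot instruction following means the request is sent as a bare instruction, with no worked examples in the prompt. Below is a minimal sketch of wrapping such a request. It assumes the Llama 2-style `[INST]` / `<<SYS>>` chat markers used with Code Llama - Instruct, and that the beginning-of-sequence token is added by the tokenizer; confirm both against the model card. The helper name is hypothetical.

```python
B_INST, E_INST = "[INST]", "[/INST]"
B_SYS, E_SYS = "<<SYS>>\n", "\n<</SYS>>\n\n"


def build_instruct_prompt(user_msg: str, system_msg: str = "") -> str:
    """Wrap a single-turn request in Llama 2-style instruction markers.

    ASSUMPTION: mirrors the chat format used by Code Llama - Instruct;
    the beginning-of-sequence token is left to the tokenizer.
    """
    content = user_msg.strip()
    if system_msg:
        # An optional system message is folded into the first user turn.
        content = B_SYS + system_msg.strip() + E_SYS + content
    return f"{B_INST} {content} {E_INST}"


print(build_instruct_prompt("Write a Python function that reverses a string."))
```

The prompt contains only the instruction itself, which is what distinguishes zero-shot use from the few-shot prompting discussed elsewhere in this report.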

Practical Tutorials and Resources

Top 50 Machine Learning Projects for Beginners in 2023

Machine learning project ideas for beginners, with source code in Python, to help kick-start a career in machine learning.

Project Pro

Free course on probabilistic foundations for theoretical research in modern data science from the University of California.

Roman Vershynin | University of California, Irvine

Collection of practical LLM guides, papers and tutorials (minus the hype).

Vicki Boykis

A detailed list of Docker commands

FOSS TechNix

LlamaIndex, previously known as GPT Index, is a data framework that helps applications built on Large Language Models (LLMs) use private or domain-specific data. It offers data connectors for ingesting data, data indexes for structuring it, and query and chat engines that provide natural-language access to it.

Various

DeepLearning.AI: Finetuning Large Language Models

The course "Finetuning Large Language Models", led by Sharon Zhou, teaches the nuances of finetuning Large Language Models (LLMs). By the end, participants should be able to apply finetuning, prepare data for it, and train and evaluate an LLM.

Lamini x DeepLearning.AI