AI Developments: Report #4

- Johnny Shollaj

- Technology, Data

- 02 Sep, 2023

This week's main highlights include open-source initiatives such as Meta's Code Llama, as well as numerous frameworks that support LLM development. New surveys have also been published to track progress on LLM-based agents and on fine-tuning.

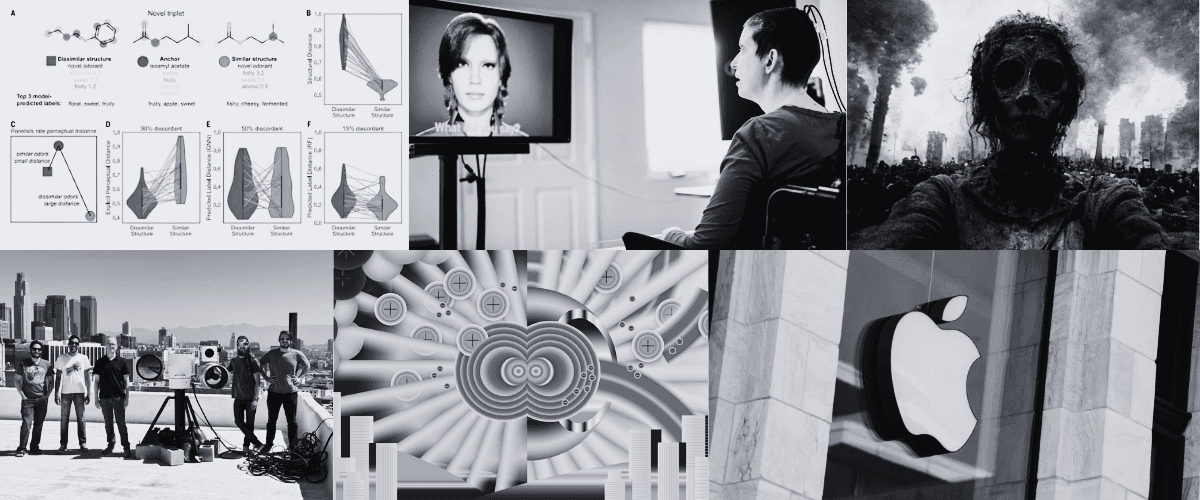

AI 'nose' predicts smells from molecular structures

Main Highlight: Scientists have developed a machine-learning tool that can predict the odor of a molecule based solely on its molecular structure. This breakthrough technology could be a game-changer for synthetic chemists in the food and fragrance industries, providing the ability to screen large numbers of molecules for aroma.

Terms to Understand: Machine Learning, Odor Prediction, Molecular Structure

University of Reading

Publication Date: September 2, 2023

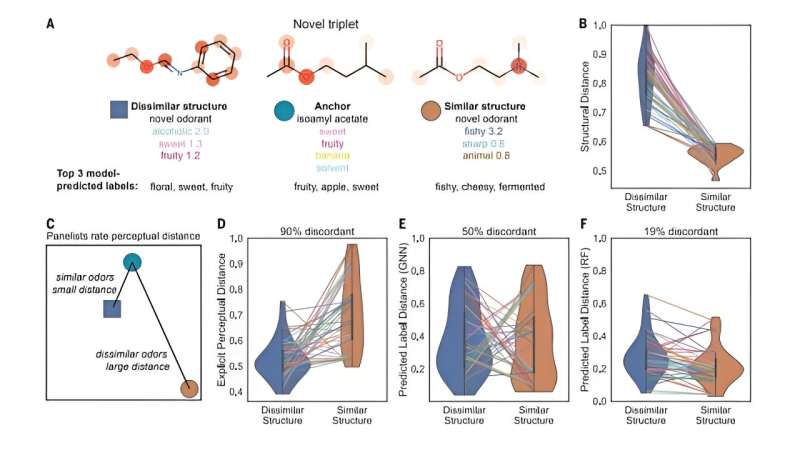

AI tech gives back 'voice' to woman with post-stroke locked-in syndrome

Main Highlight: Researchers from the University of California, San Francisco (UCSF) have created a technology that allows people with locked-in syndrome to communicate and convey facial expressions through a brain implant and a digital avatar. The system was successfully tested on a woman, enabling her to "speak" and express emotions through the avatar, whose synthesized voice was personalized to sound like her pre-injury voice.

Terms to Understand: Locked-in Syndrome, Brain Implant, Digital Avatar

Corrie Pelc | Medical News Today

Publication Date: August 30, 2023

Amazon increases fees, ChatGPT comes to the enterprise, and Apple announces a press conference

Main Highlight: This week's major tech developments include Teamshares, a startup that buys small businesses lacking succession plans and is expanding its fintech product line. Zepto became India's first unicorn of 2023 at a $1.4 billion valuation and plans to go public in 2025. Google introduced BigQuery Studio, a comprehensive data management service, while OpenAI is launching an enterprise version of ChatGPT with enhanced privacy and data analysis capabilities.

Terms to Understand: Teamshares, Zepto, BigQuery Studio, ChatGPT Enterprise

Kyle Wiggers | TechCrunch

Publication Date: September 3, 2023

Fast-tracking fusion energy’s arrival with AI and accessibility

Main Highlight: The U.S. Department of Energy has funded a project led by MIT's Plasma Science and Fusion Center to create a unified data platform for fusion research that can be processed by AI tools. The platform aims to make fusion data more accessible and organized, thereby accelerating scientific discovery and encouraging diversity in the fusion and data science workforce.

Terms to Understand: DOE Funding, Fusion Data Platform, AI-powered Tools

MIT News | Julianna Mullen

Publication Date: September 1, 2023

Research Blogs

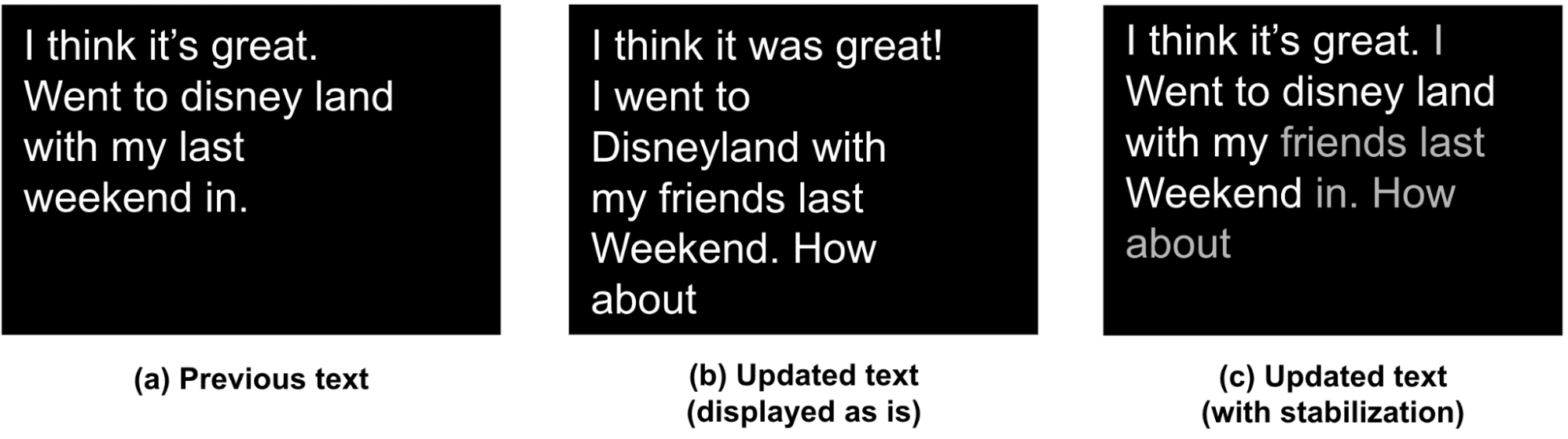

Modeling and improving text stability in live captions

Main Highlight: The paper "Modeling and Improving Text Stability in Live Captions" tackles text instability in live captions, which typically shows up as distracting "flicker" as words are revised on screen. The researchers formalize the problem with a vision-based flicker metric and then introduce a stability algorithm built on tokenized alignment, semantic merging, and smooth animation. A user study with 123 participants showed that these stabilization techniques significantly improved the viewing experience.
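To make "instability" concrete: the paper's metric is vision-based (it looks at the rendered captions), but a simpler text-level proxy conveys the same intuition. The sketch below is a hypothetical illustration, not the authors' method. It aligns consecutive caption hypotheses token by token using Python's `difflib` and counts already-displayed words that get rewritten or removed. The function names `changed_tokens` and `instability` are our own.

```python
import difflib


def changed_tokens(previous: str, current: str) -> int:
    """Count previously displayed tokens that are replaced or deleted in an update.

    Appending new words at the end is normal caption growth and is not counted;
    only edits to text the viewer has already seen are treated as flicker.
    """
    old, new = previous.split(), current.split()
    matcher = difflib.SequenceMatcher(a=old, b=new, autojunk=False)
    changed = 0
    for tag, i1, i2, _j1, _j2 in matcher.get_opcodes():
        if tag in ("replace", "delete"):
            changed += i2 - i1
    return changed


def instability(updates: list[str]) -> int:
    """Total rewritten tokens across a sequence of partial caption hypotheses."""
    return sum(changed_tokens(a, b) for a, b in zip(updates, updates[1:]))


updates = ["the cat", "the cat sat", "the hat sat on", "the hat sat on the mat"]
score = instability(updates)  # only "cat" -> "hat" counts as a rewrite
```

A stabilizer in this spirit would try to keep this count low, for example by holding back revisions to words that are already on screen.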

Terms to Understand: Text Stability, Flicker Metric, Stability Algorithm

Bahirwani, Xu - Google Research

Updated Publication Date: August 30, 2023

WeatherBench 2: A benchmark for the next generation of data-driven weather models

Main Highlight: Google Research has announced WeatherBench 2 (WB2), an updated benchmark for next-generation data-driven weather models. WB2 aims to accelerate progress in machine learning-based weather forecasting by offering a trusted, reproducible framework for evaluating models. Machine learning models now perform comparably to, and sometimes better than, traditional physics-based models, and they can produce forecasts quickly on inexpensive hardware.
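Benchmarks like WB2 score gridded forecasts with latitude-weighted metrics. On a regular latitude/longitude grid, cells shrink toward the poles, so each row is weighted by cos(latitude). The sketch below assumes plain nested lists and weights normalized to a mean of 1. It illustrates this kind of score and is not the WB2 implementation. The function name `lat_weighted_rmse` is hypothetical.

```python
import math


def lat_weighted_rmse(forecast, truth, latitudes):
    """RMSE over a lat/lon grid with rows weighted by cos(latitude).

    forecast, truth: 2-D lists indexed [lat][lon]; latitudes in degrees.
    Weights are normalised to mean 1, so a uniform error e gives an RMSE of e.
    """
    weights = [math.cos(math.radians(lat)) for lat in latitudes]
    mean_w = sum(weights) / len(weights)
    weights = [w / mean_w for w in weights]
    total, count = 0.0, 0
    for w, f_row, t_row in zip(weights, forecast, truth):
        for f, t in zip(f_row, t_row):
            total += w * (f - t) ** 2
            count += 1
    return math.sqrt(total / count)
```

Because of the weighting, the same error counts for more at the equator than near the poles, matching the larger area those grid cells cover.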

Terms to Understand: WeatherBench 2 (WB2), Machine Learning in Weather Forecasting, Evaluation Framework

Rasp, Bromberg - Google Research

Publication Date: August 31, 2023

Research Papers

Vector Search with OpenAI Embeddings: Lucene Is All You Need

Main Highlight: The paper challenges the prevailing view that a dedicated vector store is essential for using deep neural networks in search applications. Using the Hierarchical Navigable Small-World (HNSW) indexes in Lucene, the authors show that existing search infrastructure can efficiently handle vector search over OpenAI embeddings. This suggests that the cost of adding a dedicated vector store to an already complex AI stack may not be justified by its benefits.
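At its core, vector search ranks documents by the similarity between their embeddings and the query embedding. The sketch below shows exact (brute-force) k-nearest-neighbour search with cosine similarity, the result that HNSW indexes approximate without scanning every document. It uses plain Python lists with toy 2-D vectors in place of real embeddings. It does not show Lucene's API, and the function names are hypothetical.

```python
import heapq
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def top_k(query, corpus, k=2):
    """Exact k-nearest-neighbour search: score every document, keep the k best.

    corpus maps document ids to embedding vectors. An HNSW index returns
    (approximately) the same ids while visiting only a small part of the corpus.
    """
    scored = ((cosine(query, vec), doc_id) for doc_id, vec in corpus.items())
    return [doc_id for _score, doc_id in heapq.nlargest(k, scored)]


corpus = {"a": [1.0, 0.0], "b": [0.9, 0.1], "c": [0.0, 1.0]}
hits = top_k([1.0, 0.0], corpus, k=2)
```

The brute-force scan costs time linear in corpus size per query. This cost is what motivates approximate graph indexes like HNSW, whether they live in Lucene or in a separate vector store.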

Terms to Understand: Lucene, Hierarchical Navigable Small-World (HNSW) indexes, Vector Search

Lin, Pradeep, Teofili, Xian

Publication Date: August 30, 2023

Transformers as Support Vector Machines

Main Highlight: The paper establishes a formal equivalence between the optimization geometry of self-attention in transformers and a hard-margin SVM problem that separates optimal input tokens from non-optimal ones. This connection characterizes the implicit bias of transformers trained with gradient descent, offering insight into how they select tokens. The study also shows that over-parameterization aids global convergence, and the results are validated with experiments across diverse datasets.
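The term "hard-margin SVM" may be unfamiliar. The first line below is the standard hard-margin SVM for labelled points. The second paraphrases, as we understand it, the paper's attention analogue: for each sequence i, a single matrix W must score the optimal token at index opt_i above every other token x_it, with respect to the query z_i. The notation is ours and may differ from the paper's.

```latex
% Standard hard-margin SVM on labelled points (x_i, y_i), y_i \in \{-1, +1\}
\min_{w,\,b}\ \tfrac{1}{2}\lVert w\rVert^2
\quad\text{s.t.}\quad y_i\,(w^\top x_i + b) \ge 1, \qquad i = 1,\dots,n.

% Attention analogue (paraphrased): separate the optimal token from the others
\min_{W}\ \lVert W\rVert_F
\quad\text{s.t.}\quad (x_{i,\mathrm{opt}_i} - x_{it})^\top W z_i \ge 1
\quad \text{for all } t \neq \mathrm{opt}_i,\ i = 1,\dots,n.
```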

Terms to Understand: Self-Attention, Optimization Geometry, Hard-Margin SVM

Tarzanagh, Li, Thrampoulidis, Oymak

Publication Date: August 31, 2023

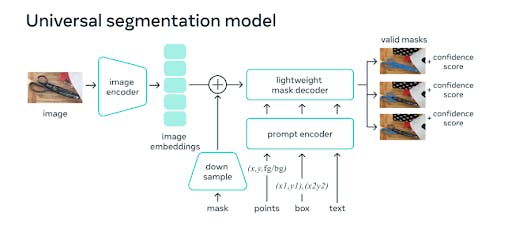

SAM-Med2D: Segment Anything Model

Main Highlight: The paper introduces SAM-Med2D, an adaptation of the Segment Anything Model (SAM) for medical image segmentation. SAM performs poorly on medical images because of the large domain gap between natural and medical imagery. SAM-Med2D closes this gap by training on a large-scale medical dataset of approximately 4.6M images and 19.7M masks. The model is comprehensively fine-tuned and shows significantly better performance and generalization than the original SAM across a wide range of medical imaging scenarios.
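Claims like "significantly better performance" in segmentation are usually measured by the overlap between predicted and ground-truth masks. A common overlap metric is the Dice coefficient, 2|P ∩ T| / (|P| + |T|). The sketch below computes Dice for binary masks stored as nested lists of 0/1. Consult the paper for the exact metrics it reports. The function name `dice` and the toy masks are illustrative only.

```python
def dice(pred, target):
    """Dice coefficient between two binary masks given as 2-D lists of 0/1.

    Dice = 2 * |P intersect T| / (|P| + |T|); two empty masks count as a perfect match.
    """
    inter = sum(p & t for p_row, t_row in zip(pred, target) for p, t in zip(p_row, t_row))
    size = sum(map(sum, pred)) + sum(map(sum, target))
    return 1.0 if size == 0 else 2.0 * inter / size


pred = [[1, 1], [0, 0]]
target = [[1, 0], [0, 0]]
score = dice(pred, target)  # one overlapping pixel, 2 + 1 foreground pixels in total
```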

Terms to Understand: SAM (Segment Anything Model), SAM-Med2D, Medical Image Segmentation

Cheng, Ye et al.

Publication Date: August 30, 2023

PointLLM: Empowering Large Language Models to Understand Point Clouds

Main Highlight: The paper introduces PointLLM, which enables large language models (LLMs) to understand and reason about 3D point clouds. This extends LLMs beyond text and 2D images. PointLLM outperforms 2D baselines, and in object captioning it even outperforms human annotators on more than 50% of samples. The model is trained with a two-stage strategy on a new dataset of 660K simple and 70K complex point-text instruction pairs.

Terms to Understand: PointLLM, 3D Point Clouds, Large Language Models (LLMs)

Xu, Wang, Chen, Pang, Lin

Publication Date: August 31, 2023

Bridging the data gap between children and large language models

Main Highlight: The paper discusses the significant gap in data efficiency between human learners, especially children, and large language models (LLMs). It offers three potential reasons for humans' superior data efficiency: evolutionary advantages, rich sensory experience, and the nature of social, interactive language input. The paper also hints at future research paths to improve LLM efficiency, such as using more grounded, interactive training data.

Terms to Understand: Data Efficiency, Large Language Models (LLMs), Human Learning

Frank et al.

Publication Date: August 31, 2023

Development

ChatDev: Create Customized Software with Natural Language Ideas

Main Highlight: ChatDev is a virtual software company staffed by intelligent agents in roles such as CEO, CTO, and programmer. Its main goal is to provide a highly customizable, LLM-based framework for studying collective intelligence. The system is already available, with features including a customizable software development process and both online log and replay modes.
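The idea of role-playing agents handing work down a development pipeline can be sketched in a few lines. This is a hypothetical illustration, not ChatDev's code or API. Each phase pairs an instructing role with an assisting role, and a fake `echo_llm` stands in for a real model call. Each phase's reply becomes the context for the next phase. `run_chat_chain`, `echo_llm`, and the phase list are our own names.

```python
from typing import Callable

# Hypothetical model interface: (assistant_role, prompt, context) -> reply.
LLM = Callable[[str, str, str], str]


def run_chat_chain(task: str, phases: list[tuple[str, str, str]], llm: LLM) -> list[str]:
    """Run phases in order; each phase is (assistant_role, instructor_role, instruction).

    The reply from one phase becomes the context for the next, so work flows
    from role to role the way a design -> coding -> testing pipeline would.
    """
    context = task
    log = []
    for assistant, instructor, instruction in phases:
        prompt = f"{instructor} asks {assistant}: {instruction}"
        context = llm(assistant, prompt, context)
        log.append(f"[{assistant}] {context}")
    return log


def echo_llm(role: str, prompt: str, context: str) -> str:
    # Fake model for demonstration: records which role handled which context.
    return f"{role} handled '{context}'"


log = run_chat_chain(
    "build a todo app",
    [("CTO", "CEO", "choose a language"), ("Programmer", "CTO", "write the code")],
    echo_llm,
)
```

Keeping the model behind a plain callable makes the pipeline easy to test with a stub before wiring in a real LLM.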

Terms to Understand: ChatDev, Intelligent Agents, Large Language Models

Author(s): OpenBMB

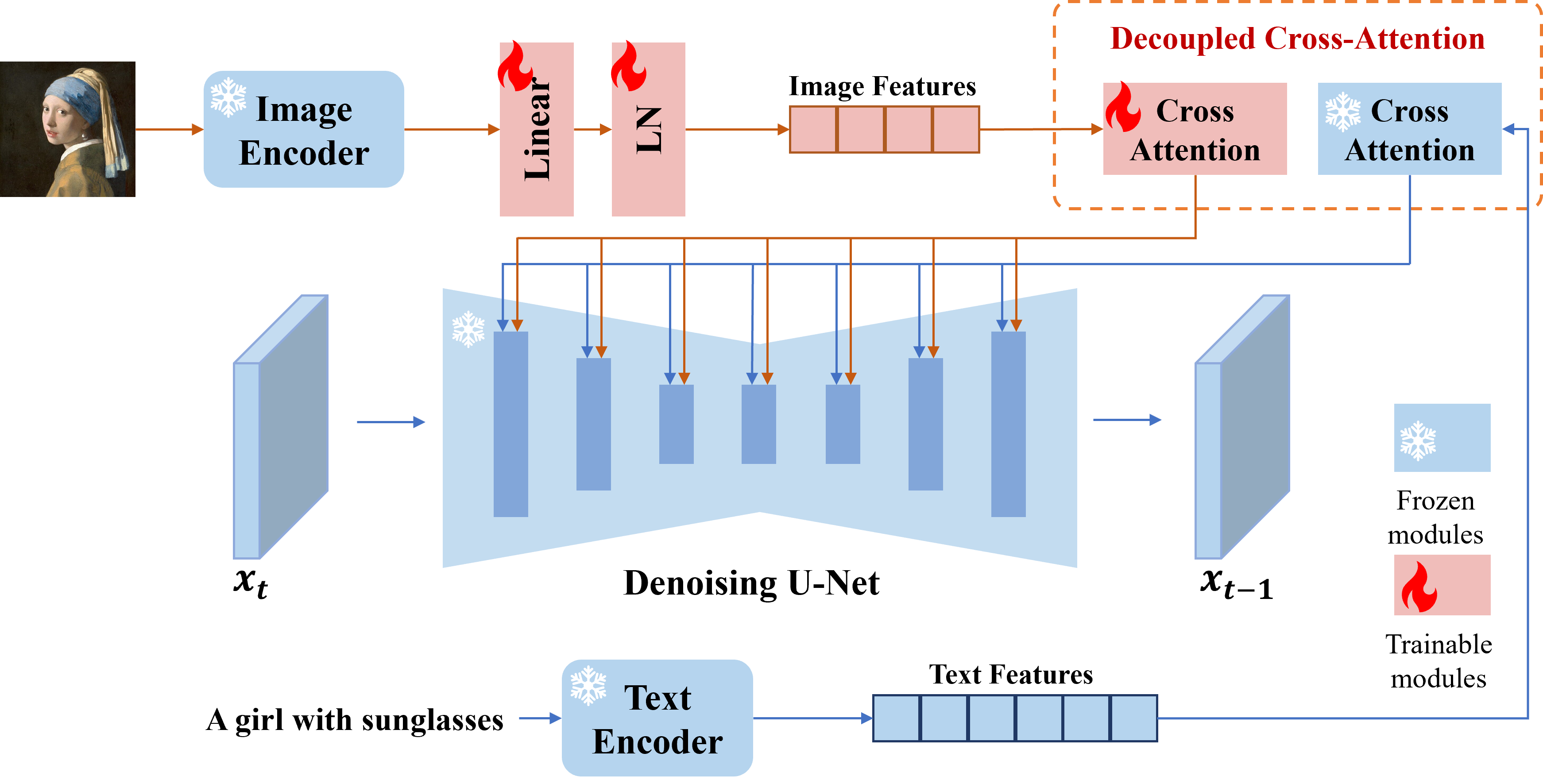

IP-Adapter: Text Compatible Image Prompt Adapter for Text-to-Image Diffusion Models

Main Highlight: IP-Adapter is a lightweight yet effective module that lets text-to-image diffusion models use image prompts. With only 22M parameters, it performs comparably to, or better than, fine-tuned image prompt models, and it supports multimodal (image plus text) generation. The repository is actively updated and includes demos of its capabilities, such as face image prompts and fine-grained features.
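IP-Adapter stays small because it adds a decoupled cross-attention path. Image features get their own cross-attention branch alongside the existing text branch, and the two outputs are summed, with a scale factor on the image branch. The sketch below shows that combination for a single query vector in plain Python. It passes keys and values in directly instead of computing them with the learned projection matrices the real model uses. The function names are hypothetical.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def attend(q, keys, values):
    """Single-query scaled dot-product attention over lists of vectors."""
    d = len(q)
    scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
    weights = softmax(scores)
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]


def decoupled_cross_attention(q, text_kv, image_kv, scale=1.0):
    """Attend to text and image features separately, then add the results.

    text_kv and image_kv are (keys, values) pairs; scale weights the image
    branch, and scale=0.0 falls back to text-only conditioning.
    """
    text_out = attend(q, *text_kv)
    image_out = attend(q, *image_kv)
    return [t + scale * i for t, i in zip(text_out, image_out)]


q = [1.0, 0.0]
text_kv = ([[1.0, 0.0]], [[2.0, 3.0]])
image_kv = ([[0.0, 1.0]], [[10.0, 10.0]])
mixed = decoupled_cross_attention(q, text_kv, image_kv, scale=0.5)
```

Because the text branch is left untouched, only the new image-branch parameters need training. This is consistent with the small parameter count mentioned above.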

Terms to Understand: IP-Adapter, Text-to-Image Diffusion Models, Multimodal Image Generation

Author: Tencent AI Lab

Graph of Thoughts: Solving Elaborate Problems with Large Language Models

Main Highlight: This is the official implementation of Graph of Thoughts: Solving Elaborate Problems with Large Language Models. This framework gives you the ability to solve complex problems by modeling them as a Graph of Operations (GoO), which is automatically executed with a Large Language Model (LLM) as the engine.
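A Graph of Operations can be pictured as a dependency graph whose nodes call the LLM and whose edges carry intermediate "thoughts" forward. The sketch below runs such a graph in topological order using Python's standard `graphlib`, on a toy sorting task. A fake `fake_llm` sorts the numbers in its prompt, and a final aggregation node merges two partial results. This is not the API of the SPCL framework. `execute_graph`, `fake_llm`, and the node names are hypothetical.

```python
from graphlib import TopologicalSorter


def execute_graph(operations, edges, llm):
    """Run every operation once, after all of its predecessors.

    operations: name -> function(llm, inputs) returning a list of thoughts (strings).
    edges: name -> set of predecessor names whose thoughts feed that operation.
    """
    graph = {name: edges.get(name, set()) for name in operations}
    results = {}
    for name in TopologicalSorter(graph).static_order():
        # Gather predecessor outputs in a fixed (sorted) order for reproducibility.
        inputs = [t for pred in sorted(graph[name]) for t in results[pred]]
        results[name] = operations[name](llm, inputs)
    return results


def fake_llm(prompt):
    # Hypothetical stand-in for a model call: sorts the numbers after "sort:".
    numbers = sorted(int(x) for x in prompt.partition(":")[2].split(","))
    return ",".join(str(n) for n in numbers)


operations = {
    "sort_a": lambda llm, inputs: [llm("sort:5,3")],
    "sort_b": lambda llm, inputs: [llm("sort:8,1")],
    # Aggregation step: combine both partial results into a single thought.
    "merge": lambda llm, inputs: [llm("sort:" + ",".join(inputs))],
}
edges = {"merge": {"sort_a", "sort_b"}}
results = execute_graph(operations, edges, fake_llm)
```

The graph shape, rather than a single chain or tree, is what allows the aggregation step to combine thoughts from independent branches.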

Terms to Understand: Graph of Thoughts, Graph of Operations (GoO), Large Language Models (LLMs)

Author: SPCL

Practical Tutorials and Resources

Pen and Paper Exercises in Machine Learning

This is a collection of (mostly) pen-and-paper exercises in machine learning. The exercises are on the following topics: linear algebra, optimisation, directed graphical models, undirected graphical models, expressive power of graphical models, factor graphs and message passing, inference for hidden Markov models, model-based learning (including ICA and unnormalised models), sampling and Monte-Carlo integration, and variational inference.

Gutmann

Awesome Conformal Prediction

A professionally curated list of awesome Conformal Prediction videos, tutorials, books, papers, PhD and MSc theses, articles and open-source libraries.

Valeman

Stanford XCS224U: Natural Language Understanding I Spring 2023

This professional Stanford Online course draws on theoretical concepts from linguistics, natural language processing, and machine learning. Topics include domain adaptation for supervised sentiment analysis, retrieval-augmented in-context learning, advanced behavioral evaluation, analysis methods, and NLP methods.

Christopher Potts / Stanford

DeepLearning.AI: How Business Thinkers Can Start Building AI Plugins With Semantic Kernel

In this course, you’ll learn how to use and build with Semantic Kernel, Microsoft’s open-source orchestrator. Along the way, you’ll learn to get the most out of LLMs by developing prompts and semantic functions, working with vector databases, and using an LLM for planning.

Microsoft x DeepLearning.AI